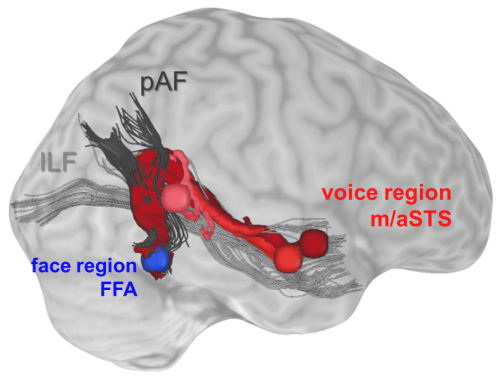

By combining fMRI with diffusion-weighted imaging, we showed that the brain has direct structural connections between face- and voice-recognition areas. These connections can activate learned associations between faces and voices even under unimodal conditions, and thereby improve person-identity recognition.


What kind of information is exchanged between these specialized areas during cross-modal recognition of other individuals? To address this question, we used functional magnetic resonance imaging and a voice-face priming design. In this design, familiar voices were followed by morphed faces that either matched or mismatched the voice in identity or in physical properties. Responses in face-sensitive regions were modulated when the face's identity or physical properties did not match the preceding voice. The strength of this mismatch signal depended on how certain the participant was about the voice's identity. This suggests that the voice provides face areas with information about both identity and physical properties.

Blank, H., Anwander, A., & von Kriegstein, K. (2011). Direct structural connections between voice- and face-recognition areas. The Journal of Neuroscience, 31(36), 12906-12915. https://doi.org/10.1523/JNEUROSCI.2091-11.2011

Blank, H., Kiebel, S. J., & von Kriegstein, K. (2015). How the human brain exchanges information across sensory modalities to recognize other people. Human Brain Mapping, 36(1), 324-339.